Background

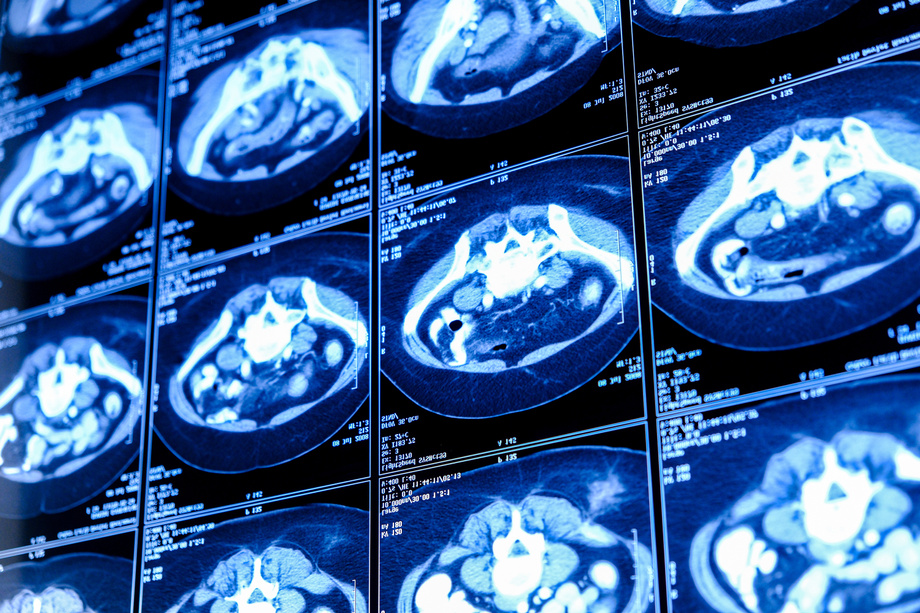

Medical imaging has advanced considerably, and automatic segmentation has become a pivotal component in many medical applications, including diagnosis, treatment planning, and research. Segmentation extracts regions of interest, such as individual organs, from medical images such as CT and MRI scans; automatic segmentation does so without manual intervention. The proposed research aims to develop a system and method for the automated segmentation of multiple organs across diverse medical imaging modalities.

Technology

Researchers at Stony Brook University (SBU) have developed a novel method and system for automating multi-organ segmentation in medical imaging. The approach trains a neural network conditionally: annotated medical images are used to teach the network to delineate the boundaries of a specified target organ. Through this process, the model learns the spatial relationships among labels, which improves its ability to generalize. The core of the system is a 3D fully convolutional neural network, a deep learning architecture built from convolutional layers that learns features directly from the input data rather than following hand-crafted rules, and that outputs a prediction for every voxel of the input volume. The system enables efficient training of a multi-label segmentation model on single-label datasets, that is, datasets in which each image is annotated for only one organ.
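The listing does not disclose the network's layers or how the target label is supplied to it, so the following Python sketch illustrates the general idea under stated assumptions rather than the inventors' implementation. It assumes the condition is a one-hot label encoding broadcast as extra input channels, uses a two-layer 3D convolutional network as a stand-in for a full 3D fully convolutional network, and leaves the weights random and untrained (no optimizer step is shown). All function names (`conv3d_same`, `init_params`, `conditional_fcn`, `conditional_samples`, `bce_loss`, `segment_all`) and the toy volume and masks are hypothetical. The sketch shows how single-label datasets become (volume, label, mask) training triples that are scored only against the annotated organ, and how running the same network once per label yields a multi-label map.

```python
import numpy as np


def conv3d_same(x, w, b):
    """Zero-padded ("same") 3D convolution in the deep-learning sense
    (cross-correlation). x: (C_in, D, H, W); w: (C_out, C_in, k, k, k), k odd."""
    c_out, _, k, _, _ = w.shape
    p = k // 2
    d, h, wd = x.shape[1:]
    xp = np.pad(x, ((0, 0), (p, p), (p, p), (p, p)))
    out = np.zeros((c_out, d, h, wd)) + b[:, None, None, None]
    for dz in range(k):
        for dy in range(k):
            for dx in range(k):
                patch = xp[:, dz:dz + d, dy:dy + h, dx:dx + wd]
                out += np.einsum("oi,idhw->odhw", w[:, :, dz, dy, dx], patch)
    return out


def init_params(num_labels, hidden=4, k=3, seed=0):
    """Random, untrained weights for a toy two-layer 3D FCN (hypothetical)."""
    rng = np.random.default_rng(seed)
    c_in = 1 + num_labels  # 1 image channel + one-hot condition channels
    return {
        "w1": rng.normal(0.0, 0.1, (hidden, c_in, k, k, k)),
        "b1": np.zeros(hidden),
        "w2": rng.normal(0.0, 0.1, (1, hidden, 1, 1, 1)),
        "b2": np.zeros(1),
    }


def conditional_fcn(volume, label, params, num_labels):
    """Per-voxel probability that each voxel belongs to organ `label`.
    ASSUMPTION: the condition is a one-hot label broadcast as input channels."""
    cond = np.zeros((num_labels,) + volume.shape)
    cond[label] = 1.0
    x = np.concatenate([volume[None], cond], axis=0)
    hidden = np.maximum(conv3d_same(x, params["w1"], params["b1"]), 0.0)  # ReLU
    logits = conv3d_same(hidden, params["w2"], params["b2"])[0]
    return 1.0 / (1.0 + np.exp(-logits))  # sigmoid


def conditional_samples(single_label_datasets):
    """Turn {label: [(volume, binary_mask), ...]} into (volume, label, mask) triples."""
    for label, pairs in single_label_datasets.items():
        for volume, mask in pairs:
            yield volume, label, mask


def bce_loss(prob, mask, eps=1e-7):
    """Binary cross-entropy against the one organ this sample is annotated for."""
    prob = np.clip(prob, eps, 1.0 - eps)
    return float(-np.mean(mask * np.log(prob) + (1.0 - mask) * np.log(1.0 - prob)))


def segment_all(volume, params, num_labels, threshold=0.5):
    """Run the network once per label and merge: 0 = background, 1..N = organs."""
    probs = np.stack([conditional_fcn(volume, k, params, num_labels)
                      for k in range(num_labels)])
    organ = probs.argmax(axis=0) + 1
    return np.where(probs.max(axis=0) >= threshold, organ, 0)


# Toy usage: two single-label "datasets", each annotating a different organ.
num_labels = 2
params = init_params(num_labels)
vol = np.random.default_rng(1).normal(size=(8, 8, 8))
datasets = {
    0: [(vol, (vol > 0.5).astype(float))],   # label 0: toy stand-in mask
    1: [(vol, (vol < -0.5).astype(float))],  # label 1: toy stand-in mask
}
for volume, label, mask in conditional_samples(datasets):
    print(label, bce_loss(conditional_fcn(volume, label, params, num_labels), mask))
seg = segment_all(vol, params, num_labels)
print(seg.shape)
```

Because the network receives the label as an input, one set of weights serves every organ, and a dataset annotated for only one organ still contributes a training signal for that organ without requiring masks for the others.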

Advantages

- Time‑efficient
- Training data are easier to generate, since single‑label annotations suffice
- Increased accuracy of segmentation outlines

Application

Automatic annotation of medical images using multi‑class segmentation models trained on single‑label datasets

Inventors

Arie Kaufman, Distinguished Prof. & Chair, Computer Science

Konstantin Dmitriev, PhD Student

Licensing Potential

- Development partner
- Commercial partner
- Licensing

Licensing Contact

Donna Tumminello, Assistant Director, Intellectual Property Partners, donna.tumminello@stonybrook.edu, 631-632-4163

Patent Status

Patented

https://patents.google.com/patent/US20220237801A1/en?oq=17%2f614%2c702

Tech Id

050-9020